The BMAT will be sat on the 18th October 2022 rather than the usual first week of November. The test has also changed to pen and paper.

The 2020 BMAT sitting faced many technical issues due to the change in format from previous years.

Last year, students sat the BMAT, and based on the number of students who had applied to university ahead of the October 15th deadline for medicine and Oxbridge, it looks likely that number will grow to around 15,000 students this year.

A majority of these students will be opening their BMAT results today, with their chances of a place at medical school riding on the results of this highly competitive test. Unfortunately, it seems that very few of them had an entirely painless experience with the exam.

We surveyed over 900 students, over 96% of whom took the test online at test centres, with only a few taking the test in its traditional paper format.

We’ve also written an article explaining the 2020 results in depth.

How many students had issues with the BMAT in 2020?

Over 80% of students reported problems with the test, many of them technical glitches that seem to have made the test far more challenging than intended.

The test consists of two sections of multiple-choice questions, the first on general problem solving and verbal reasoning, the second on scientific knowledge, followed by an essay section.

Across the first two sections, students attempt 59 questions in 90 minutes, an average of just over 90 seconds per question.
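
The per-question budget is easy to check, and the same arithmetic shows how quickly a loading delay eats into it. The sketch below is illustrative only: the function names are our own, and the 15-second delay is the figure one student reports later in this article, applied here to every question as a simplifying assumption.

```python
# Illustrative sketch: average time per question across BMAT Sections 1 and 2.
# Function names are hypothetical; the 59 questions and 90 minutes come from the article.


def seconds_per_question(questions: int, minutes: float) -> float:
    """Average time available per question, in seconds."""
    return minutes * 60 / questions


def effective_seconds(questions: int, minutes: float, load_delay: float) -> float:
    """Average working time per question if each one takes `load_delay` seconds to load.

    Assumption: every question is delayed by the same amount, which is a
    simplification of what students actually reported.
    """
    return seconds_per_question(questions, minutes) - load_delay


baseline = seconds_per_question(59, 90)
delayed = effective_seconds(59, 90, load_delay=15)

print(f"Baseline: {baseline:.1f} s per question")
print(f"With a 15 s load delay: {delayed:.1f} s per question")
print(f"Share of time lost: {15 / baseline:.0%}")
```

Under these assumptions, the budget falls from about 91.5 seconds to about 76.5 seconds per question, a loss of roughly one sixth of the available time.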

What kind of issues did students face?

More than a third of the students we spoke to found questions too slow to load, with diagrams failing to appear, or loading so slowly that they only had half the time to attempt the question.

A lot of the questions, especially on section 2, took around 15 seconds to load. For each of the questions this accounted for a lot of time lost. I hope they take this into account. This was similar for section 3, where it lagged a lot. It took time for my words to go through and to remove words. Because of this, I had less time to check through my work.

Student applying for UCL.

Invigilators also seem to have been poorly prepared for the test, with a third of students complaining that they weren’t given the full time to complete the test due to problems at the test centre.

Invigilators were also underprepared to deal with the software: applicants reported numerous glitches with pop-ups, browser extensions, and camera tracking, as well as anti-cheating messages being displayed to students who were struggling with the technology.

The camera software was glitching, so I was only in the test for about 4 minutes and was only able to answer 2 questions. The test repeatedly said that I was moving out of frame or navigating away, which I wasn’t doing, which led to it ending my test. I was so focused on not moving that I wasn’t able to focus on the questions and had to read one of them about 3 times. This is no way to sit such an important test.

Student applying for Cambridge & Imperial.

Students from one test centre report being asked to attempt the test using older computers and out-of-date browsers, which Cambridge Assessment specifically warned against using. Others simply found their laptop batteries running out, and there were reports of a power cut and of several centres losing their internet connection mid-test.

My test centre provided Chromebooks for us to sit the BMAT, despite Cambridge Admissions Testing’s advice against using Chromebooks as they are “unstable.” I began the test and it immediately shut off with a pop-up telling me that I had stopped screen sharing when in fact, I had not. Consequently, I had to go through the registration process again whilst fellow test-takers were speeding through section 1.

Student applying to Cambridge & UCL.

I did not have access to my extra time I am entitled to and was told only two days before that I would not be able to have extra time. I had been practising with extra time during the half term and had used up all the papers. As a result, I could not practise a paper before the test without extra time. As well as this, the demo link was never sent to me.

Student applying to Imperial & Keele.

What could students do after facing technical issues?

Students and test centres were given a week after the test to report issues to Cambridge Assessment, but given the scale and breadth of problems, it is hard to see how grades can be adjusted in a way that is fair while also making the test results meaningful.

How will universities use the BMAT results?

Universities may choose to weight interviews more heavily in their admissions processes, but may find it difficult to increase the number of students they interview sufficiently to make up the difference. Oxford, for example, interviewed 23% of Medicine applicants in the last admissions cycle. If half of their applicants are predicted three A*s, have equally good records at GCSE, and similarly winning personal statements, how will they choose which half to reject without meeting them?

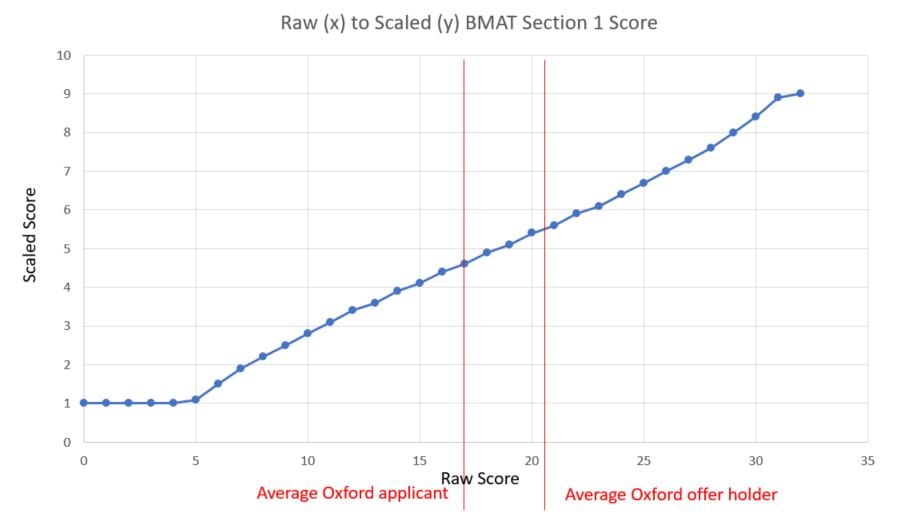

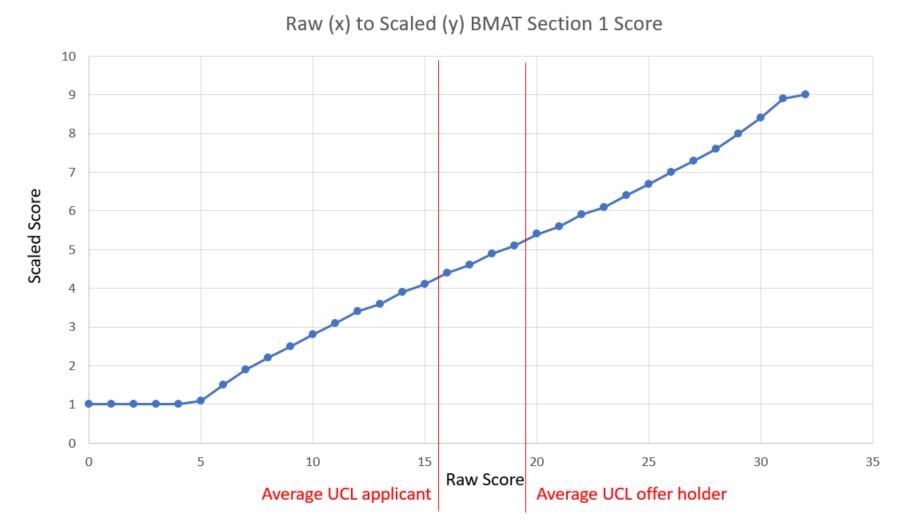

In the last admissions cycle, only 12% of students who applied to study medicine were successful, with BMAT results being the clearest indicator we have of how these admissions decisions are made. An average Cambridge applicant for Medicine applies with 4.6, 4.9, and 3.2 in the three sections of the BMAT, while the average successful applicant scores almost a full point higher in the first two sections, with 5.5, 5.9, and 3.4. The gap at UCL is even smaller, with only four marks between the average applicant and average offer holder scores.

While these differences seem stark, they can be as small as four correct answers in each section, a gap easily created by graphs that barely load or a flashing warning that you’ve been flagged for cheating.

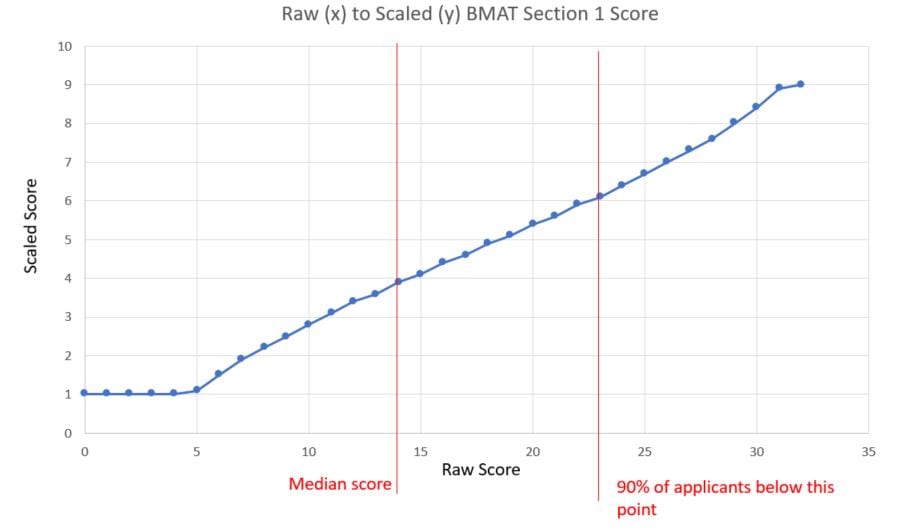

To consider this in greater detail, if we look at the distribution of applicants in Section 1, we see that the 40% of applicants who score between the median and the top 10% are separated by only eight marks. In effect, each extra mark moves an applicant five percentage points up the distribution, so just three correct answers take a student from the top half to roughly the top third.
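
The arithmetic behind that claim can be sketched in a few lines. This is a rough illustration rather than a model of the real score distribution: it assumes applicants are spread evenly across the eight marks between the median and the top 10%, and the constant and function names are our own.

```python
# Illustrative sketch of the Section 1 arithmetic described above.
# Assumption: applicants are spread evenly across the eight marks that separate
# the median from the top 10%. The real distribution will not be this uniform.

MEDIAN_PERCENTILE = 50       # bottom of the band (the median)
TOP_DECILE_PERCENTILE = 90   # top of the band (start of the top 10%)
MARKS_IN_BAND = 8            # marks separating the two, per the article


def points_per_mark() -> float:
    """Percentage points of the distribution covered by one mark."""
    return (TOP_DECILE_PERCENTILE - MEDIAN_PERCENTILE) / MARKS_IN_BAND


def percentile_after(extra_marks: int) -> float:
    """Percentile reached by a median applicant who gains `extra_marks` marks."""
    if not 0 <= extra_marks <= MARKS_IN_BAND:
        raise ValueError("extra_marks must stay within the eight-mark band")
    return MEDIAN_PERCENTILE + extra_marks * points_per_mark()


for marks in range(4):
    percentile = percentile_after(marks)
    print(f"+{marks} marks: percentile {percentile:.0f}, top {100 - percentile:.0f}%")
```

On these assumptions, three extra marks take a median applicant to the 65th percentile, that is, into the top 35% of applicants.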

The gap is even smaller when we look at Oxford, with the difference between 17/32 and 20/32 accounting for the whole of the difference between average scores for applicants and offer holders.

Because of the time-pressured nature of the test, and the fine margins involved in scoring, students would be forgiven for worrying that technical issues mean that their BMAT score won’t be a true measure of their potential.

Our final thoughts

The BMAT was introduced in 2001 to help universities to distinguish between otherwise equally qualified applicants.

If, as seems likely, only a minority of students were able to take the test as intended, then it is useless as a tool for identifying talent. Students whose chances at medical school, and a career caring for us all in the NHS, have been dashed deserve more from an education system that seems determined to let them down at every opportunity in 2020.

Want to get a deeper analysis of the BMAT 2020 scores?

Join us at our BMAT results webinar! This session will be focused entirely on BMAT results, with expert analysis and information for you to take away.
